Lecture Capture, Exam Performance, and Attendance

Lecture capture is a relatively new technology that allows fully automated recording of classes. It usually involves a camera at the back of the classroom, a microphone on the professor’s lapel, and equipment that records what happens on the screen. The combined audio and video is then made available to students soon after the end of each lecture. I’m a big fan and have been using it for all my classes for more than two years now. My students love it, but it’s very hard to assess its causal impact on either attendance or performance.

Lecture capture has many obvious benefits. It gives students who must miss class an opportunity to catch up, and lets students review material before exams. Students can watch lectures wherever and whenever is convenient, and adjust the speed of the lecture to match their needs. Some of my students find lecture too fast-paced and prefer to watch video so they can pause and rewatch the technical portions. Other students will put me on 1.5x or even 2x.

At the same time, students who choose to watch video instead of attending lecture cannot directly ask questions or participate in interactive activities, and there is more potential for distraction when watching from home relative to a lecture hall. My biggest worry is that students will justify skipping lecture by telling themselves that they will watch the video later but either never get around to it or binge-watch right before the exam.

To encourage students to stay current with the material I require them to take weekly, automatically graded, online quizzes. I also actively monitor a discussion board where students can post questions at any time.

This year, attendance in my econometrics class (120 students) started near 100% at the beginning of the semester but dropped to about 55% by the midterm exam. Last year the attendance pattern was very similar. The picture only shows behavior up to the midterm exam, and the big spike in the blue (attendance) line you see at the end is the review session for that exam. I’ll be posting updated data at the end of the semester, but here’s a quick preview: Attendance has continued to drop and is now hovering between 25% and 30%.

The red line in the picture is the fraction of the class that watched at least 50% of the recorded lecture. Many students watch a smaller portion, but I believe they are looking for particular topics as they review their notes or work on problem sets. After a short ramp-up, the watch rate has stabilized at about 30%, though it is not always the same students watching video.

The green line is the sum of the two lines. I don’t know for sure how many students both attended and watched any particular lecture, but I believe the number is small, so the sum is approximately the fraction of the class that either attended or watched. The spike in attendance at the review session looks like it was entirely students switching from watching to attending. This is not all that surprising, since I announced in the previous lecture that they would spend most of the review session working through problems in small groups, and the payoff would be a lot higher for students who attended in person.
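To make the approximation concrete: the sum of the two rates overstates the "attended or watched" fraction by exactly the share of students who did both. Without knowing that overlap, the most one can say is that the true fraction lies between the larger of the two rates and their sum (capped at 100%). Here is a minimal Python sketch of that bound; the function name `union_bounds` is my own, and the inputs are just the rough midterm-era rates quoted in this post.

```python
def union_bounds(attend_rate, watch_rate):
    """Bounds on the fraction who attended OR watched a given lecture.

    Lower bound: every watcher also attended (maximum overlap).
    Upper bound: nobody did both (zero overlap), capped at 1.0.
    """
    lower = max(attend_rate, watch_rate)
    upper = min(1.0, attend_rate + watch_rate)
    return lower, upper


# Roughly 55% attending and 30% watching, as described in the text.
low, high = union_bounds(0.55, 0.30)
print(f"attended or watched: between {low:.0%} and {high:.0%}")
```

If the overlap really is small, the truth sits near the upper end of that range, which is what the green line plots.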

This semester 17% of my students were regular video watchers (at least 6 lectures) who attended few (fewer than 6) lectures. 60% were regular lecture attenders who watched few lectures, and 21% neither attended nor watched regularly. Just two students reported attending at least 6 lectures and watching at least 6.
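For anyone who wants to sort their own class the same way, the four groups come down to two yes/no questions with a cutoff of 6 lectures each. The sketch below is only an illustration: the function name, the group labels, and the tiny list of students are all made up, not my actual records.

```python
from collections import Counter

THRESHOLD = 6  # "regular" means at least 6 lectures, as in the text


def classify(attended, watched, threshold=THRESHOLD):
    """Assign a student to one of the four groups described above."""
    regular_attender = attended >= threshold
    regular_watcher = watched >= threshold
    if regular_attender and regular_watcher:
        return "both"
    if regular_attender:
        return "attender"
    if regular_watcher:
        return "watcher"
    return "neither"


# Hypothetical (lectures attended, lectures watched) pairs, invented for illustration.
students = [(10, 1), (2, 9), (3, 2), (8, 7)]
counts = Counter(classify(a, w) for a, w in students)
print(counts)
```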

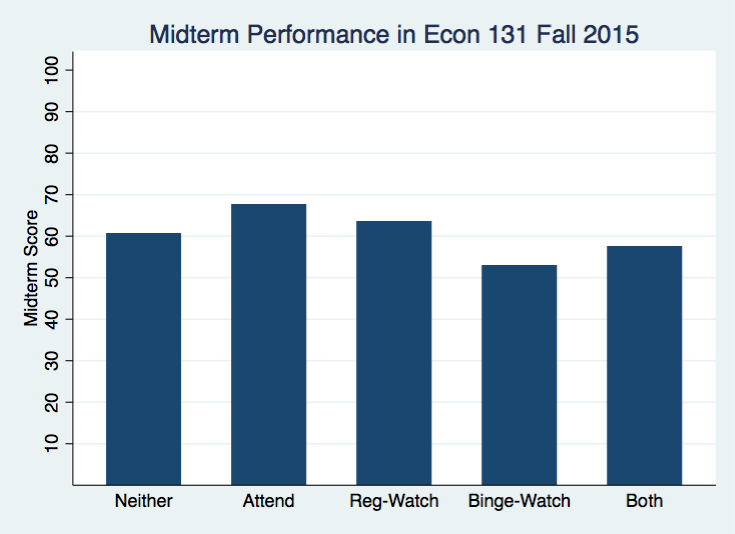

I reported last fall that the regular video watchers performed almost the same on the midterm exam as the regular lecture attenders, and both groups got higher scores than the students who did neither. This fall, I’m not seeing the same pattern. Instead, the regular watchers performed almost the same as the “neithers” and the attenders scored a statistically significant 7 points higher than either group.

This year, however, I’ve decided to break my 20 video watchers into two categories. Binge-watchers (n=9) are the regular watchers who watched at least 4 lectures for the first time during the week before the midterm exam. Reg-watchers (n=11) are those who also watched at least 6 lectures, but watched no more than 3 for the first time during that last week. The reg-watchers scored almost 11 points higher than the binge-watchers, and even with just 20 observations the difference is close to conventional statistical significance (p=0.11).
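With groups this small, one simple way to check a difference in means is a permutation test: shuffle the group labels many times and see how often chance alone produces a gap at least as large as the observed one. To be clear, this is a sketch of one reasonable approach and not necessarily the test behind the p-value above. The function names are my own, and the scores in the example are invented, not my students' results.

```python
import random
from statistics import mean


def watcher_type(total_watched, first_views_last_week):
    """Label a regular watcher using the cutoffs in the text (6 total, 4 late)."""
    if total_watched < 6:
        return None  # not a regular video watcher
    return "binge" if first_views_last_week >= 4 else "reg"


def permutation_p_value(group_a, group_b, n_shuffles=10_000, seed=0):
    """Two-sided permutation test for a difference in mean scores."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_shuffles):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            extreme += 1
    return extreme / n_shuffles


# Invented exam scores, for illustration only.
reg_scores = [82, 75, 90, 68, 79, 85]
binge_scores = [70, 64, 81, 59, 73]
print(permutation_p_value(reg_scores, binge_scores))
```

Even a small p-value here would only say the gap is unlikely to be a fluke of who landed in which group; it would say nothing about why the groups differ.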

It is difficult to know what to make of this. At first blush, it makes me want to turn off the video recording (at least in the week before the exam) and try to force students to attend lecture. On the other hand, this is an econometrics class, and I spend half my time telling them that correlation does not equal causation. That is, the results above do not mean forcing students to come to lecture will increase their scores by an average of 7 points.

It’s quite possible that the majority of students who watch video are the students who are having the most trouble, and that they would do even worse if they had to attend class and did not have access to video that they can pause and rewatch. This would be consistent with my highly unscientific conversations with students who watch video about why they do so.

To learn more, I see two potential routes. First, I could gather more data. Next year, at the beginning of the semester, I’m already planning to give a more detailed survey about students’ background and performance in other quantitative classes, and also give them an in-class pre-test of the material. Then I can see if the watchers really are the less-prepared students.

The second route is to start doing randomized experiments. There are many hardships on this road, not least of which is figuring out how to do this ethically, since it could mean withholding access to video (or in-person lectures) from some random subset of the class. Randomizing studying behavior (either quantity or quality) would be even harder. But if these hurdles can be cleared, interpretation of results would be straightforward, and at this point, I could use a little straightforward.