Total Failure: How not to use clickers to take attendance

I think clickers are a great way to get students actively engaged in a lecture class, and a pretty good way to learn whether students are learning what you’re teaching so you can do something about it immediately. This semester I wanted to use them for a third purpose: collecting high-quality data on who attended which lectures. It was a total failure.

I believe my students get a lot out of engaging with the material and each other during class, but I’m not sure it would be as valuable if they were forced to be there. Attendance in my classes is not mandatory: I want students in their seats because they believe it’s valuable. It may also be that students who skip class and watch it on video get just as much out of that experience. Even though there are serious issues with interpretation, I’d love to have better measures of the correlation between class attendance and class performance. In the past I’ve asked students on a pre-midterm survey how many lectures they attended, but I would rather have objective measures where students aren’t tempted to tell me what they think I want to hear.

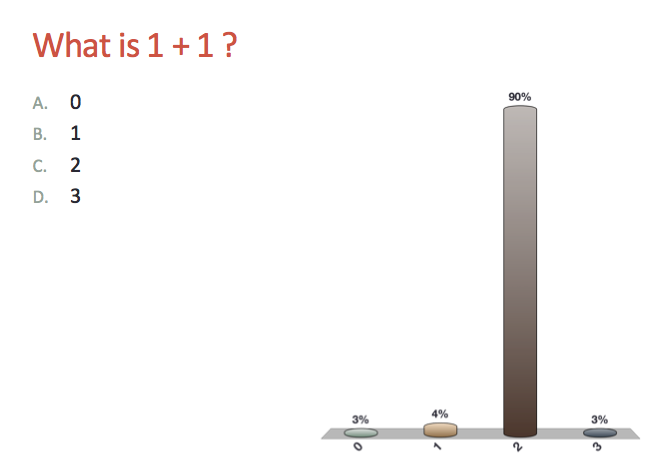

At the beginning of this semester I told my students they all had to check a clicker out of the library, register that clicker with their name, and bring it whenever they came to class. I told them how we would use the clickers and that I would keep track of attendance with them. I told my students that what they actually clicked would have absolutely no effect on their grade, and I started each class for the first few weeks with a simple question. I thought the first one was pretty easy:

But maybe this question isn’t quite as simple as it looks. If you add one positron to one electron, you get zero particles (and a fair bit of energy). If you add one raindrop to another raindrop, you get one big raindrop. And sometimes when you add a man and a woman and wait a few months, you’ll get three people. Because not everyone in the room answered this question with a clicker, I asked an easier one at the beginning of the next class:

When participation didn’t match attendance again, I compared the clicker registration data to the class roster and emailed everyone who hadn’t registered, politely asking them to get a clicker if they hadn’t already and to register it. The following week, when participation still didn’t reflect attendance, I gave up and for the rest of the semester just used clickers as I had in the past: solely for engagement and feedback.
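For anyone who wants to run the same roster check, here is a minimal sketch of the comparison. It assumes the roster and the clicker registrations can each be exported with an email address per student. The function name, the data layout, and the example addresses are all made up for illustration; they don't come from any particular clicker system.

```python
def unregistered_students(roster, registrations):
    """Return roster entries that have no registered clicker, in roster order.

    roster: list of (name, email) tuples (hypothetical export format)
    registrations: iterable of email strings from the clicker registration data
    """
    # Normalize case and whitespace so "ADA@example.edu " matches "ada@example.edu".
    registered = {email.strip().lower() for email in registrations}
    return [
        (name, email)
        for name, email in roster
        if email.strip().lower() not in registered
    ]


# Made-up example data, not real students.
roster = [
    ("Ada", "ada@example.edu"),
    ("Ben", "ben@example.edu"),
    ("Cy", "cy@example.edu"),
]
registrations = ["ADA@example.edu", "cy@example.edu "]

print(unregistered_students(roster, registrations))
# prints [('Ben', 'ben@example.edu')]
```

The result is the list of people to email. Matching on email rather than name is just a guess at what is least error-prone; any identifier that appears in both exports would work.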

To be honest, I don’t know why it failed so miserably. On the last day of class my teaching assistant counted 40 students in the room, and the highest number of clicks any question got was 18. I would be very interested in theories, since knowing why will help me decide what to do next. That said, I have a few ideas:

- Make attendance (based on clicking or watching at least 50% of a video lecture) a small part of the grade. I worry that this might encourage some students to bring other students’ clickers with them to class.

- Keep emailing them until everyone has a clicker registered. This won’t make people click once they get to class though.

- At the beginning of each class have everyone hold up their clicker and manually get the names of everyone that doesn’t have one. Then make sure the number of clicks matches the number held up in the air. Hopefully after a couple rounds, very few people would show up without their clicker. I worry that this process will discourage some students from attending class.
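To make the first idea above concrete, here is a rough sketch of how the credit rule might be written down. It assumes that for each student and lecture I know whether they clicked in class and what fraction of the video they watched. The function names and the record format are hypothetical, and the 50% threshold is the only number taken from the idea itself.

```python
def earns_attendance_credit(clicked_in_class, video_fraction_watched, threshold=0.5):
    """A lecture counts as attended if the student clicked in class
    or watched at least `threshold` of the video lecture."""
    return clicked_in_class or video_fraction_watched >= threshold


def attendance_share(records, threshold=0.5):
    """Fraction of lectures credited, given (clicked, video_fraction) pairs."""
    if not records:
        return 0.0
    credited = sum(
        1 for clicked, watched in records
        if earns_attendance_credit(clicked, watched, threshold)
    )
    return credited / len(records)


# Made-up record for one student over four lectures.
records = [(True, 0.0), (False, 0.5), (False, 0.25), (False, 0.0)]
print(attendance_share(records))
# prints 0.5
```

This only pins down the arithmetic. It does nothing about the worry that students might click on behalf of absent friends.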

At the end of every episode of the Teach Better Podcast we ask our guests to give an example of something they’ve tried in a class that failed. I firmly believe that the best teachers become the best by trying new things in their classes, and inevitably some of these ideas don’t work out. These failures are learning opportunities, and I’m hoping to eventually learn something from this experience. Any and all insights and ideas are welcome!